I’ve been saying it ever since $1.2 trillion was wiped off the U.S. stock market after the release of DeepSeek R1: compute is king, and compute will be absolutely essential to AI development.

Tonight proves it.

Elon Musk’s AI company built the world’s largest AI-training cluster in just 122 days, then quietly doubled its GPU count from 100,000 to 200,000 NVIDIA H100s.

Let that sink in.

Necessity Is the Mother of Invention

Elon Musk admitted that setting up their own data center wasn’t the plan.

They only did it because, when they approached existing data centers, they were told it would take 18–24 months to get up and running.

That timeline was a non-starter.

So they built it themselves—overcoming massive infrastructure challenges in record time.

The first problem? Power.

They didn’t have enough to run 200,000 GPUs at the old factory they had converted to house the NVIDIA hardware. (Building a new data center from scratch wasn’t an option.)

The solution? Stacks and stacks of generators—and even Tesla batteries to store and distribute power efficiently.

Then came the next challenge: cooling.

Managing 200,000 GPUs generates an enormous amount of heat. XAI had to design an advanced water-cooling system to prevent overheating, all while ensuring that every component of the hardware stack operated in perfect synchronization—before they could even begin training AI models.

An unbelievable feat of engineering. Of course, Elon had a secret weapon: the best engineers from SpaceX and Tesla—a competitive advantage that shouldn’t be understated.

Necessity is the mother of invention. (I’m sure getting one over on Sam Altman was another huge motivating factor.)

The Industrialization of Intelligence

This is significant because, as I’ve been arguing here, creating intelligence as a commodity is an industrial process.

It requires extremely capital-intensive, complicated infrastructure.

And now, XAI is gaining a distinct advantage over other frontier model builders like OpenAI and Anthropic—because it is going full-stack.

By building out its own colossal data center (it’s actually called ‘Colossus’), XAI has solved some of the hardest problems in AI scaling:

Securing power

Cooling massive GPU clusters

Housing and networking the compute infrastructure

Coordinating all these systems to scale efficiently

Unlike OpenAI or Anthropic, XAI actually owns its AI infrastructure—and has a blueprint on how to run it.

Its engineers are working right next to their own compute—not renting it from Microsoft or Amazon. (They could even hear their GPUs humming in response to especially hard reasoning tasks.)

Big Boy Billionaire Showdown

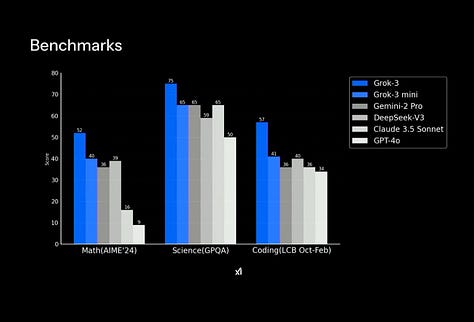

Early benchmarks show that Grok 3 (still in beta) is very good.

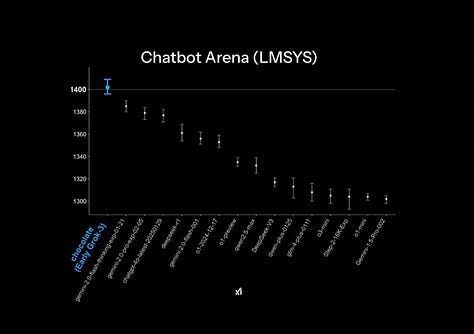

It’s currently ranked first on Chatbot Arena—with a big gap over competitors.

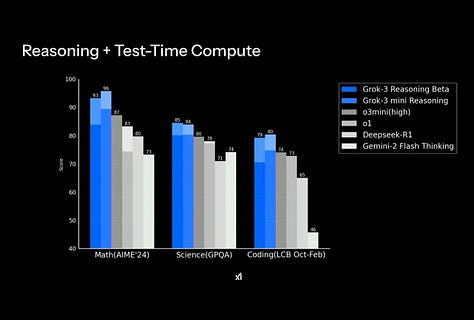

It also scores impressively on pre-training and reasoning evaluations, outperforming models like DeepSeek V3, GPT-4o, DeepSeek R1, and o3-mini.

And it’s still improving.

There are several major takeaways here:

XAI caught up at breakneck speed. In just 18 months, it has matched the frontier-level models. Maybe there is ‘no moat’ for model developers… but the only competition is from those who can brute-force billions of dollars on infrastructure spend.

Engineering and infrastructure were fundamental to making this happen.

This wasn’t just about better algorithms; it was about solving the real-world industrial challenges of AI development.

Money talks. XAI didn’t just buy 200,000 NVIDIA H100 GPUs; they had to build an entire ecosystem to support them. That costs a s* ton of money. If the raw GPU cost alone was around $5 billion, the true cost of building this competitive AI compute stack? Closer to $8–9 billion. And this is just for one cluster.
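To make the cost figures above concrete, here is a rough back-of-the-envelope sketch. It uses only the numbers quoted in this post (200,000 GPUs, roughly $5 billion in raw GPU cost, $8–9 billion all-in). The per-GPU price and the overhead ratio are derived from those figures, not reported by XAI, and the function name `cost_breakdown` is purely illustrative.

```python
# Back-of-the-envelope breakdown using the estimates quoted in this post.
# Derived values (per-GPU price, overhead ratio) are implied by those
# estimates; they are not official XAI or NVIDIA figures.

GPU_COUNT = 200_000
RAW_GPU_COST_USD = 5_000_000_000                        # "around $5 billion"
TOTAL_COST_RANGE_USD = (8_000_000_000, 9_000_000_000)   # "$8-9 billion"


def cost_breakdown(gpu_count, raw_gpu_cost, total_cost):
    """Split an all-in cluster cost into GPU and non-GPU components."""
    non_gpu_cost = total_cost - raw_gpu_cost
    return {
        "implied_price_per_gpu": raw_gpu_cost / gpu_count,
        "non_gpu_cost": non_gpu_cost,
        # Extra infrastructure spend per dollar spent on GPUs.
        "overhead_ratio": non_gpu_cost / raw_gpu_cost,
        "all_in_cost_per_gpu": total_cost / gpu_count,
    }


low, high = (
    cost_breakdown(GPU_COUNT, RAW_GPU_COST_USD, total)
    for total in TOTAL_COST_RANGE_USD
)

for label, b in (("low", low), ("high", high)):
    print(
        f"{label}: ${b['implied_price_per_gpu']:,.0f} per GPU, "
        f"${b['non_gpu_cost'] / 1e9:.0f}B on everything else "
        f"({b['overhead_ratio']:.0%} on top), "
        f"${b['all_in_cost_per_gpu']:,.0f} all-in per GPU"
    )
```

On these estimates, power, cooling, networking and the building add something like 60 to 80 cents to every dollar spent on the chips themselves, which is the "entire ecosystem" part of the bill.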

This reinforces what I have been arguing more broadly about AI development globally: only well-resourced players (the big boy billionaire club?) who can invest in and scale AI infrastructure will remain dominant.

They will also be the players who produce and distribute the most valuable commodity: compute—something everyone will need, including open-source builders.

Big Intelligence Requires Big Compute

Think about it like this: while there are clever engineering optimizations to compress models so they can run more efficiently (e.g., DeepSeek R1), the real breakthroughs in reasoning will demand more compute, for both training and reinforcement learning.

This explains why Anthropic CEO Dario Amodei has been talking about how the next generation of models will require millions of GPUs for training—not just hundreds of thousands.

In his words:

"I think if we go to $10 or $100 billion, and I think that will happen in 2025, 2026, maybe 2027... then I think there is a good chance that by that time we'll be able to get models that are better than most humans at most things."

No wonder Elon Musk says they’ve already started work on their next supercluster, which will require five times more power than Colossus.

And if intelligence is truly infinite, then perhaps the only real limit to AI’s progression is physics itself—as in, at what point will we physically be unable to sustain AI infrastructure?

Stay tuned.

Thank you as well for your excellent review. I really want to ask three questions: 1. Are we back to compute? I thought it was only a few weeks ago that DeepSeek showed us processor power is not everything (did I not get the memo? :)). 2. Can we trust Musk? He has consistently oversold his cars, space adventures, social media platform, and more. He is a marketing man who knows what stories people want to hear. 3. Does the world want to trust its data and decision-making to the owner of X and the leader of DOGE? Tesla sales were down as much as 40% in some European markets in January 2025 compared with January 2024.

Thanks for the early morning review — it arrived here in Germany just before breakfast. One thing that “Herr Musk” has proven again and again is that he can assemble teams to solve extreme engineering challenges in record time. Of course, it doesn’t hurt to have semi-infinite deep financial pockets. So when will we see a really good LLM based agent called “Deep Finance”?